Seil Kang

Short Bio

I am a Ph.D. student in Computer Science at Yonsei University.

My research focuses on investigating the behavior and inner workings of large-scale multimodal transformers, with an emphasis on interpretability-driven model improvement and alignment in systems such as Large Vision-Language Models (LVLMs) and Diffusion Transformers (DiTs).

I am also interested in research that analyzes and develops novel user experiences built on recent advances in multimodal transformer science and engineering.

News

- [Sep. 2025] The paper Interpreting Attention Heads for Image-to-Text Information Flow in Large Vision-Language Models has been accepted to the NeurIPS 2025 Mechanistic Interpretability Workshop as a spotlight paper!

- [Sep. 2025] The paper Rare Text Semantics Were Always There in Your Diffusion Transformer has been accepted to NeurIPS 2025!

- [Aug. 2025] Honored to receive the 2nd prize at the 2025 AI Graduate School Symposium, hosted by South Korea's Ministry of Science and ICT!

- [Feb. 2025] The paper Your Large Vision-Language Model Only Needs A Few Attention Heads For Visual Grounding was selected as a highlight paper at CVPR 2025 (acceptance rate = 3.7%)!

- [Feb. 2025] The paper Your Large Vision-Language Model Only Needs A Few Attention Heads For Visual Grounding has been accepted to CVPR 2025!

- [Feb. 2025] The paper See What You Are Told: Visual Attention Sink in Large Multimodal Models has been accepted to ICLR 2025!

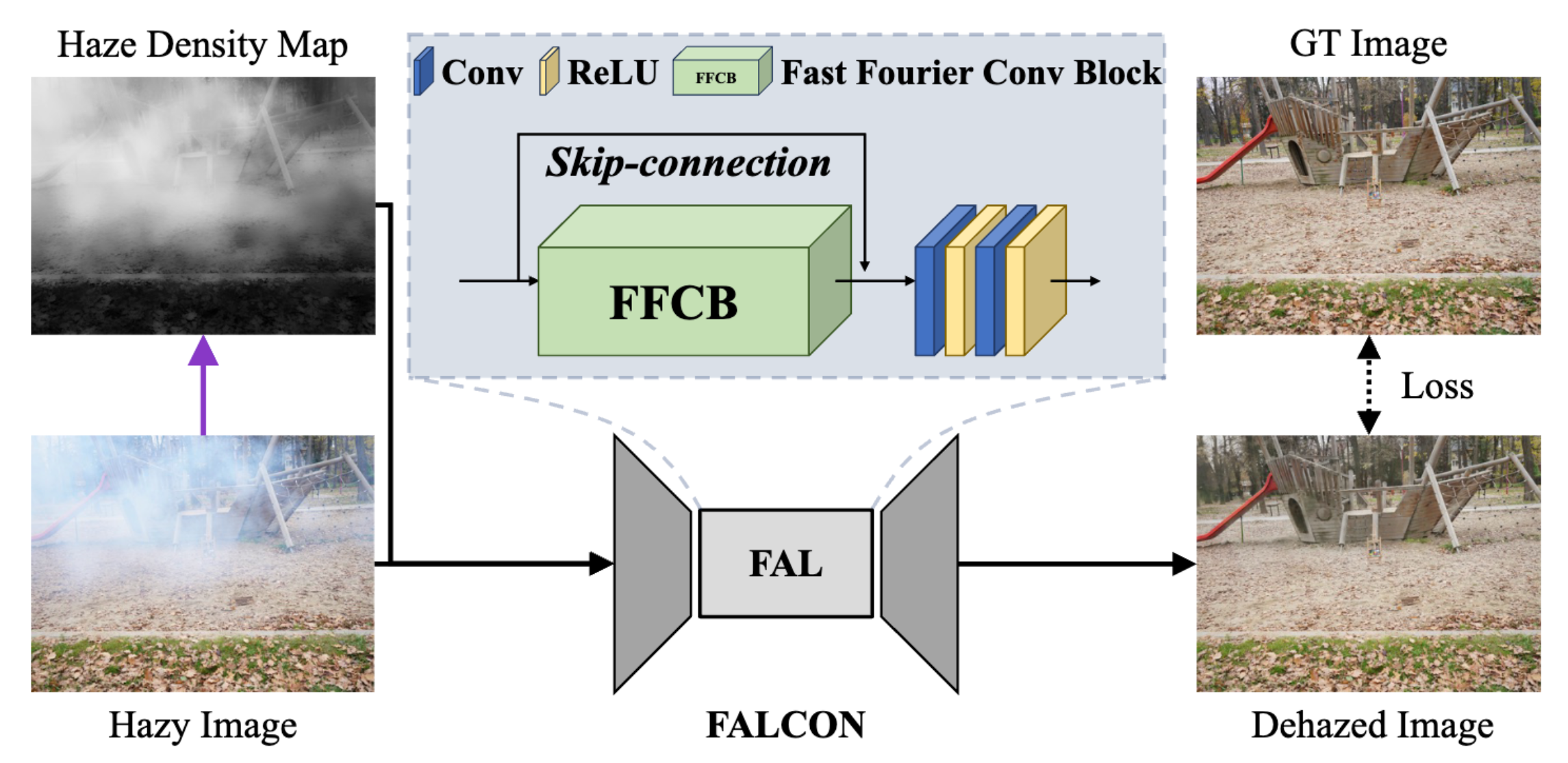

- [Jul. 2024] The paper Falcon is released!

- [Mar. 2024] The paper WoLF is released!

- [Mar. 2024] The paper CoBra is released!

- [Nov. 2023] I have transitioned from an M.S. program to a Ph.D. program.

- [Mar. 2023] I was admitted to the Yonsei College of Computing as an M.S. student. I will continue my research at the MICV Lab.

Publications [Google Scholar]

Pre-Print

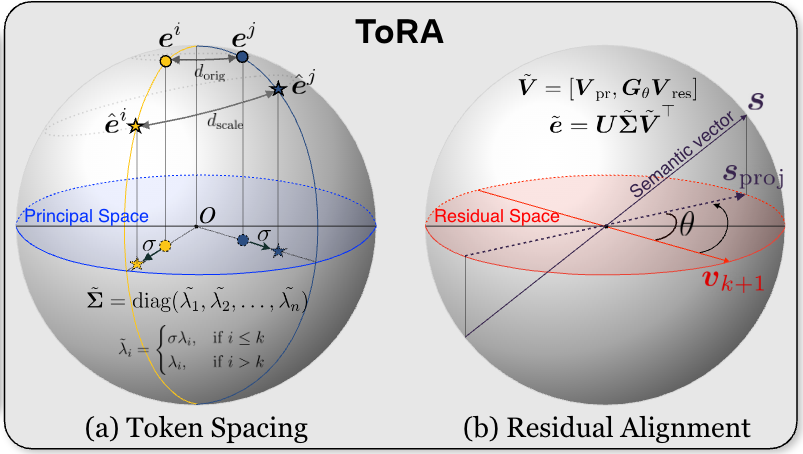

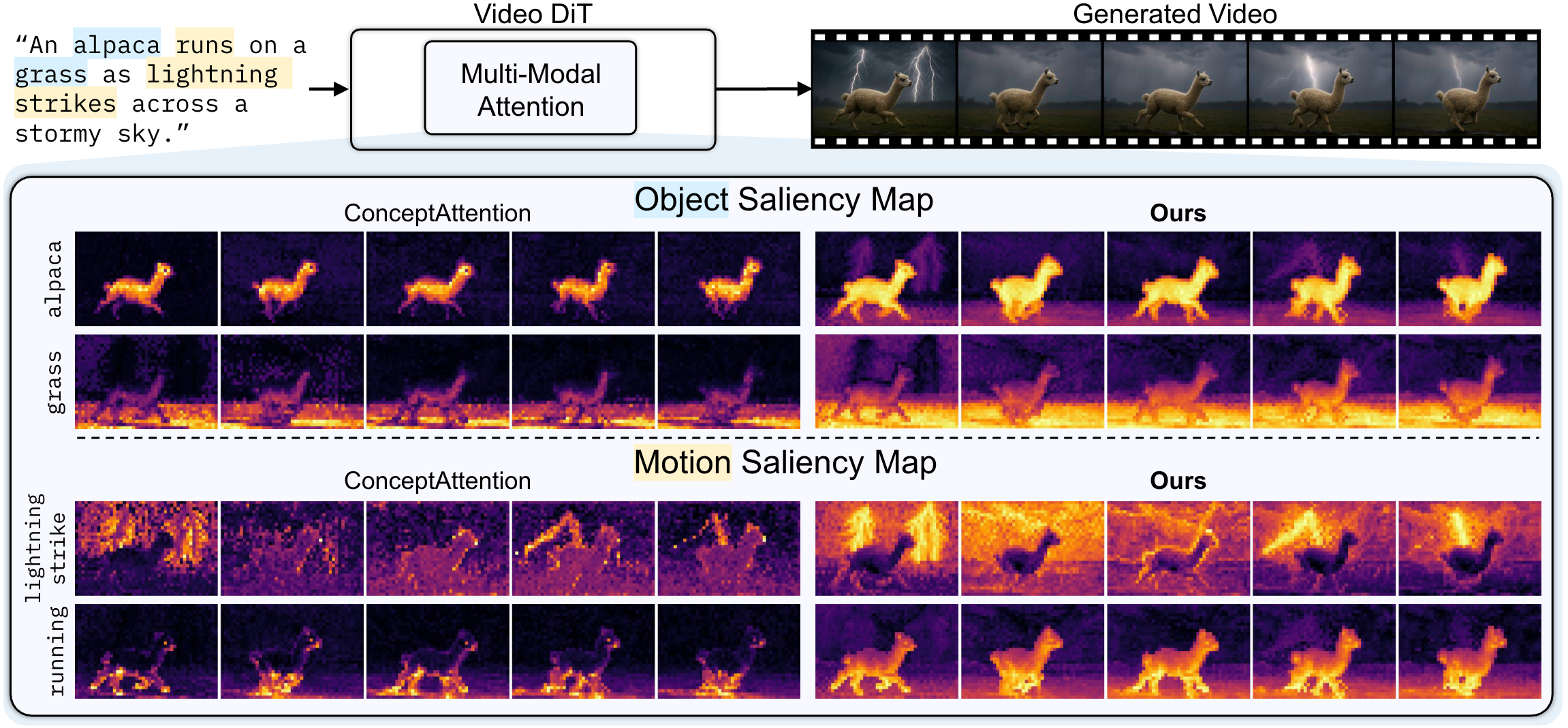

NeurIPS 2025

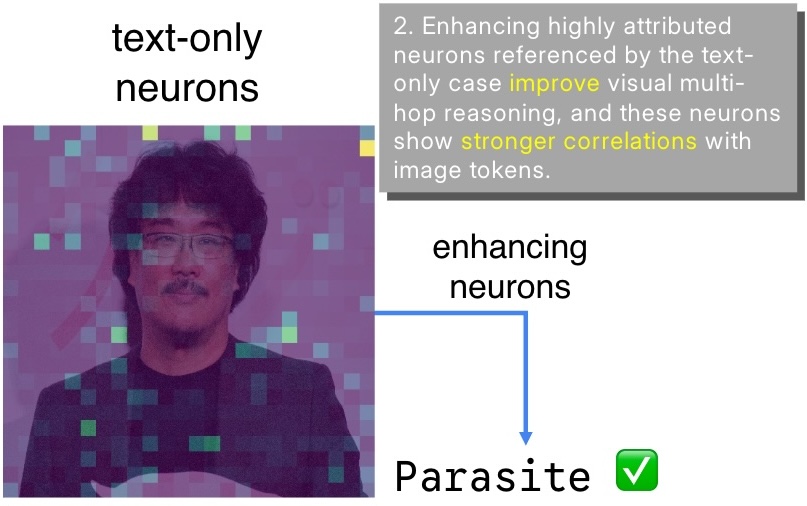

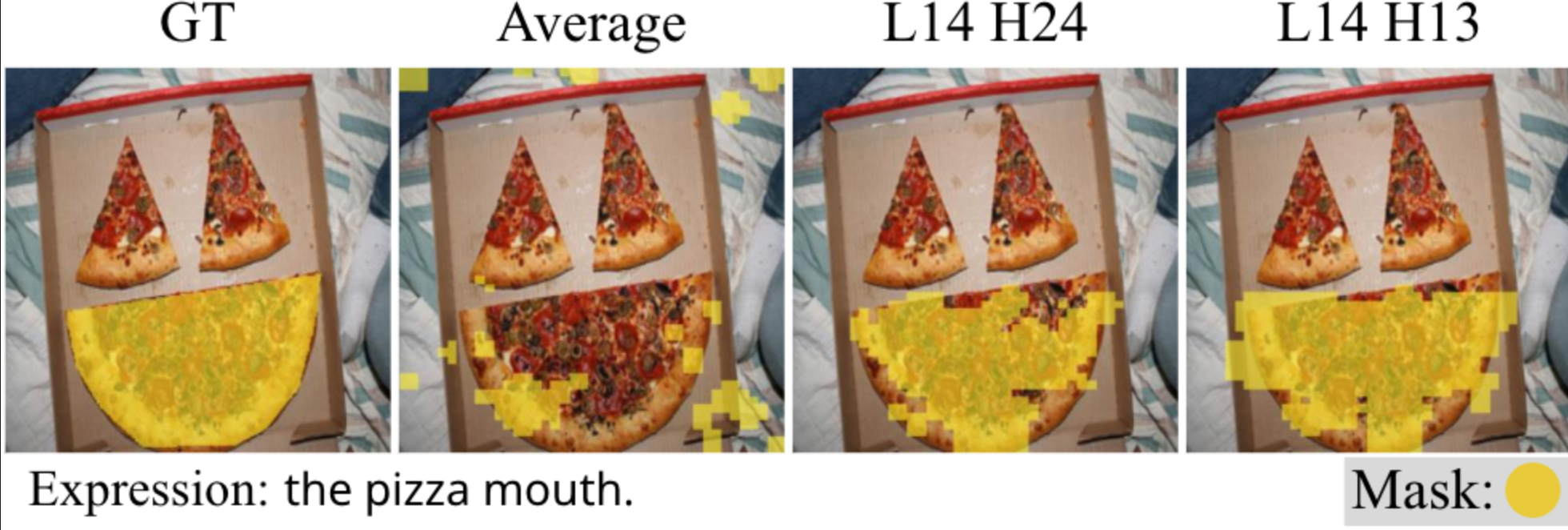

NeurIPS 2025 Mechanistic Interpretability Workshop (Spotlight, <13%)

Technical Report

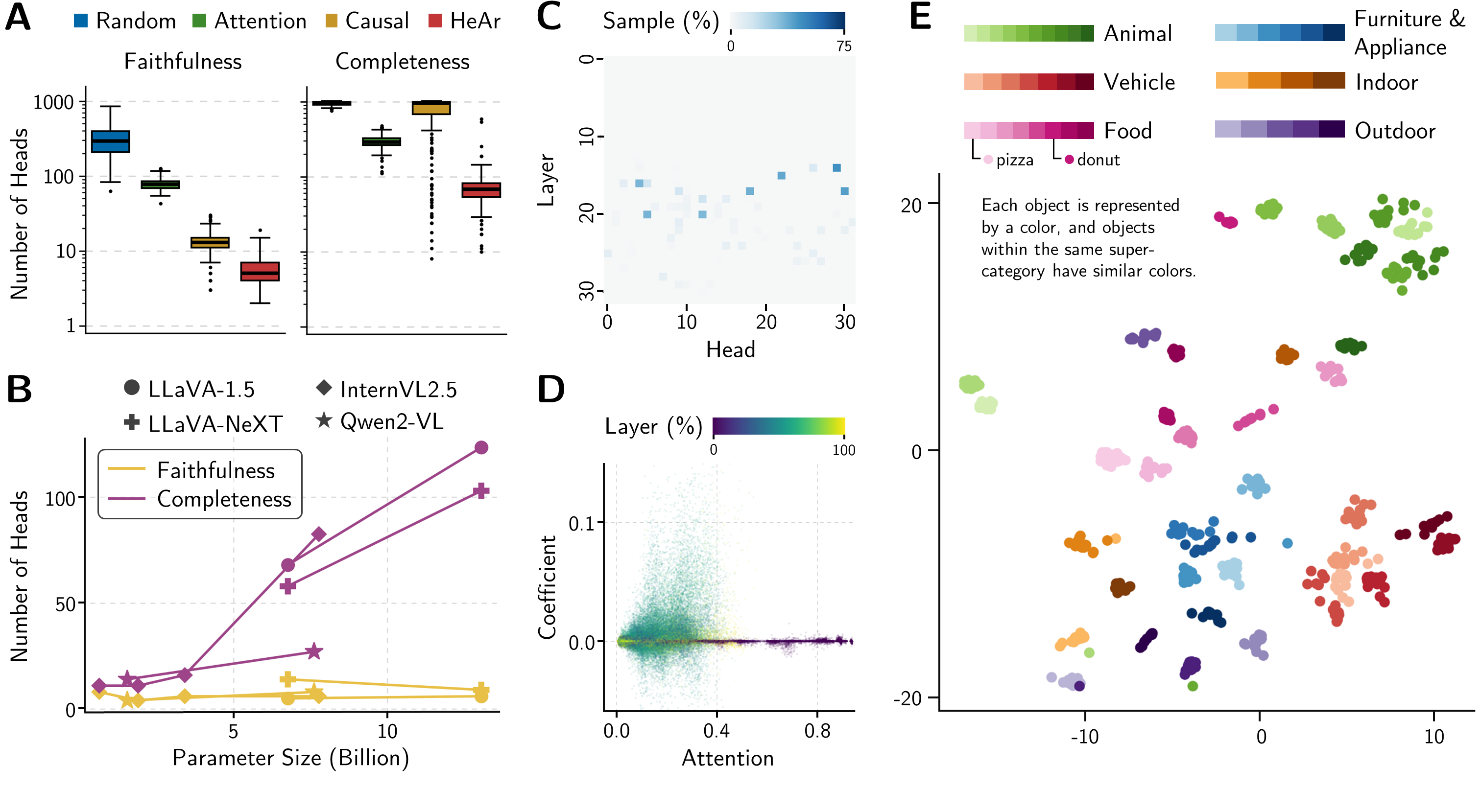

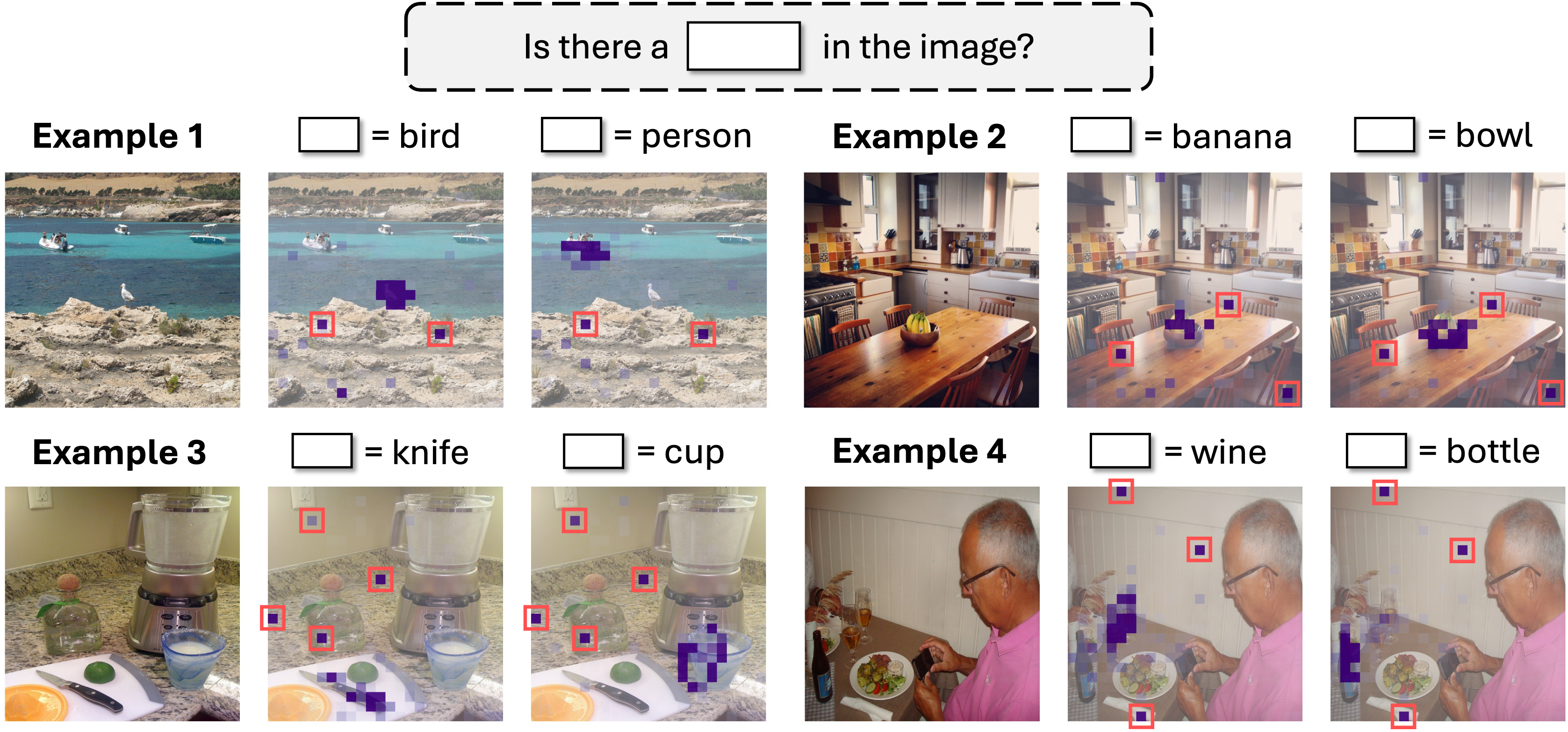

CVPR 2025 (Highlight, <3%)

ICLR 2025

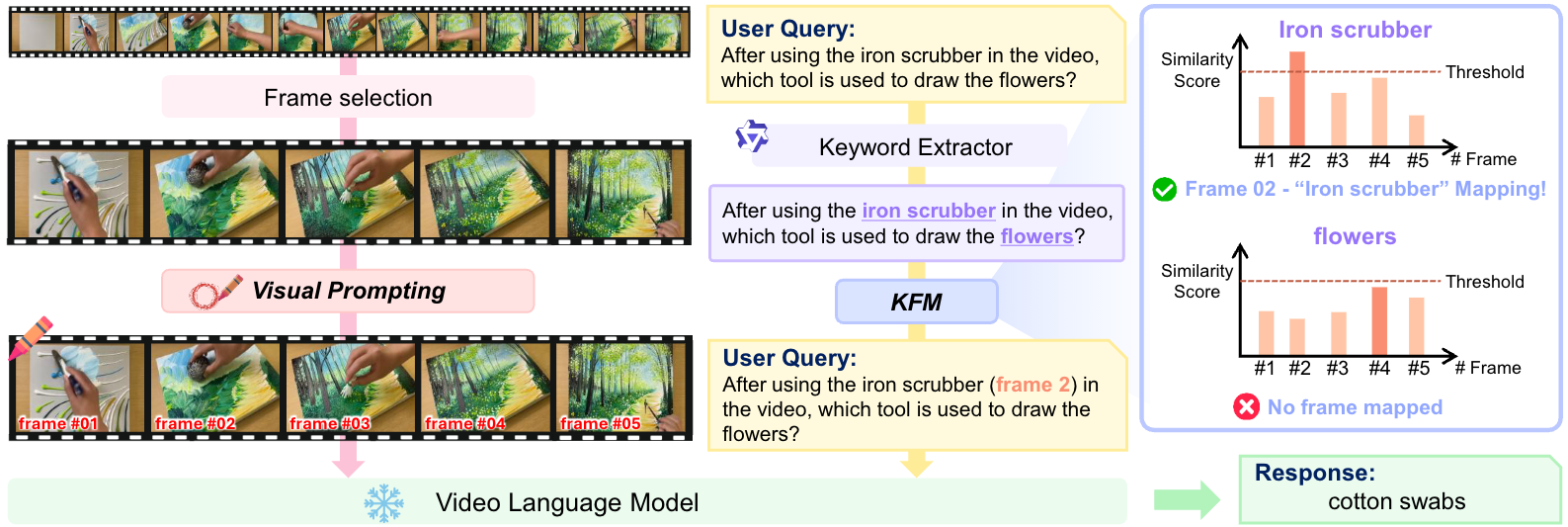

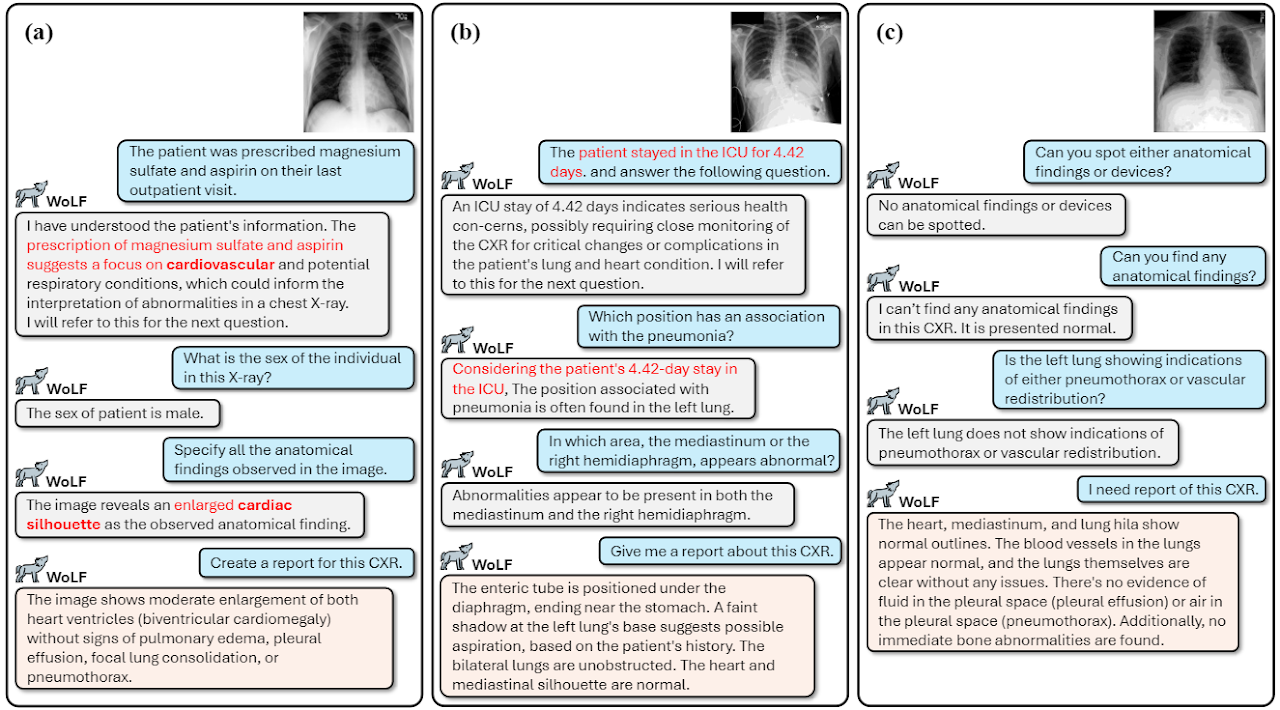

Technical Report

Pre-Print

CVPRW 2025

PR 2024
